29 March 2016

Tuesday Crustie: Winning the war

I recently discovered the TV show Skin Wars on Hulu. It’s one of those “Make something cool really fast” competition shows like FaceOff, Top Chef, or Project Runway. In this case, the topic is body painting. The show’s had two seasons, with a third starting up next month. But this was the last winning entry that clinched season two for Lana!

23 March 2016

The craziest recommendation form I have ever completed

As a professor, you write recommendations for students regularly. One came in this morning, so I started filling it out. The first questions were pretty standard, asking you to rate students compared to others you’ve interacted with.

One struck me as oddly worded: something like, “Student will do the right thing when no one is looking.” Um... if this student does these things when no one is looking, how can anyone know?!

But then I got to the question (click to enlarge):

Options include “Astronaut,” “Nobel prize,” “US Supreme court justice.”

And you can pick “All that apply”! “I’ll be Secretary of State and run a pro sports team!” “I’ll be Fortune 500 CEO and an astronaut, becoming the first entrepreneur in space!” These are the kind of career ambitions you might have when you’re eight. But for evaluating university students? No. These achievements are so rare and capricious that it makes no sense to ask someone to say that a student is likely to achieve these.

I thought, “This is the craziest, least realistic question I have ever had to do on a scholarship recommendation.”

Then I saw the next question, and I had to throw out my previous record holder for craziest, least realistic question I’ve had to answer for a scholarship recommendation.

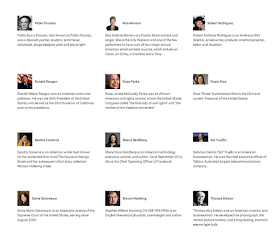

Options on the first screen include Barack Obama, Elon Musk, and Charles Darwin.

Options on second screen include Marie Curie, Mother Theresa, and Oprah Winfrey.

Options on third screen include Rosa Parks, Thomas Edison, and Rita Moreno.

There are some people in that list I would use if I wanted to diss a student. Some of the politicians come to mind.

The way this recommendation was going, I expected the next question to be, “What is this student’s mutant superpower?”

These questions are dumb. I get that a scholarship organization wants students to aim high and achieve and all, but geez. These are not realistic career expectations. And it’s not a helpful way for professors to try to characterize what they think the student’s good and bad qualities are.

One struck me as oddly worded: something like, “Student will do the right thing when no one is looking.” Um... if this student does these things when no one is looking, how can anyone know?!

But then I got to the question (click to enlarge):

Which of the following achievements may be applicable to your student's future: Select all that apply.

Options include “Astronaut,” “Nobel prize,” “US Supreme court justice.”

And you can pick “All that apply”! “I’ll be Secretary of State and run a pro sports team!” “I’ll be Fortune 500 CEO and an astronaut, becoming the first entrepreneur in space!” These are the kind of career ambitions you might have when you’re eight. But for evaluating university students? No. These achievements are so rare and capricious that it makes no sense to ask someone to say that a student is likely to achieve these.

I thought, “This is the craziest, least realistic question I have ever had to do on a scholarship recommendation.”

Then I saw the next question, and I had to throw out my previous record holder for craziest, least realistic question I’ve had to answer for a scholarship recommendation.

If you fast forward 10-20 years, who would these student most closely resemble?

Options on the first screen include Barack Obama, Elon Musk, and Charles Darwin.

Options on the second screen include Marie Curie, Mother Teresa, and Oprah Winfrey.

Options on third screen include Rosa Parks, Thomas Edison, and Rita Moreno.

There are some people in that list I would use if I wanted to diss a student. Some of the politicians come to mind.

The way this recommendation was going, I expected the next question to be, “What is this student’s mutant superpower?”

These questions are dumb. I get that a scholarship organization wants students to aim high and achieve and all, but geez. These are not realistic career expectations. And it’s not a helpful way for professors to try to characterize what they think the student’s good and bad qualities are.

17 March 2016

A pre-print experiment: will anyone notice?

In late February, there was a lot of chatter on my Twitter feed from the #ASAPBio meeting, about using pre-prints in biology. This has been the accepted practice in physics for decades.

My previous experience with pre-prints was underwhelming. I’d rather have one definitive version of record. And I’d like the benefits of it being reviewed and edited before release. Besides, my research is so far from glamorous that I’m not convinced a pre-print makes a difference.

Following the ASAPbio meeting, I saw congratulatory tweets like this:

Randy Schekman strikes again: yet another #nobelpreprint - Richard Sever

Marty Chalfie on bioRxiv! That’s Nobel #2 today - Richard Sever

Yay, Hopi Hoekstra (@hopihoekstra) just published on @biorxivpreprint - Leslie Vosshall

Similarly, a New York Times article on pre-prints that appeared several weeks later focused on the Nobel laureates. I admit I got annoyed by tweets and articles about Nobel winners and Ivy League professors and HHMI labs and established professors at major research universities using pre-prints. I wasn’t the only one:

I wish this article didn’t erase the biologists who have been posting to arXiv for years.

If pre-prints are going to become the norm in biology, they can’t just work for the established superstars. Pre-prints have to have benefits for the rank and file out there. It can’t just be “more work.”

For example, I think one of the reasons PLOS ONE was a success was that it provided benefits, not just for superstars, but for regular scientists doing solid but incremental work: it provided a venue that didn’t screen for importance. That was a huge change. In contrast, new journals that try to cater to science superstars by publishing “high impact” science (PLOS Biology, eLife, and Science Advances), while not failures, have not taken off in the same way that PLOS ONE did.

I decided I would try an experiment.

I don’t do the most glamorous scientific research, but I do have a higher than average social media profile for a scientist. (I have more Twitter followers than my university does.) So I thought, “Let’s put up a pre-print on bioRxiv and see if anyone comments.”

I spent the better part of a morning (Thursday, 25 February 2016) uploading the pre-print. Since I had seen people whinging about “put your figures in the main body of the text, not at the end of the paper,” I had to spend time reformatting the manuscript so it looked kind of nice. I also made sure my Twitter handle was on the front page, to make it easy for people to let me know they’d seen my paper.

I was a little annoyed that I had to go through one of those clunky manuscript submission systems that I use for journals. I had to take a few stabs at converting the document into a PDF. bioRxiv has PDF conversion built in, but the results were unsatisfactory. There were several image conversion problems. One picture looked like it came out of a printer running low on ink. Lines on some of the graphs looked like they had been dipped in chocolate. Converting the file to PDF on my desktop looked much better. I uploaded that, only to find that even that had to go through a PDF conversion process that chewed up some more time.

bioRxiv preprints are vetted by actual people, so I waited a few hours (three hours and thirty-nine minutes) to get back a confirmation email. It was up on bioRxiv within a couple of hours. All in all, pretty quick.

I updated the “Non-peer reviewed papers” section of my home page. I put a little “New!” icon next to the link and everything. But I didn’t go out and promote it. I deliberately didn’t check it on bioRxiv to ensure that my own views wouldn’t get counted. Because the point was to see whether anyone would notice without active promotion.

I waited. I wasn’t sure how long to wait.

After a day, my article had an Altmetric score of 1. The @biorxivpreprint account and three other accounts that looked like bots tweeted the paper, apparently because they trawl and tweet every bioRxiv paper. (By the way, “Bat_papers” Twitter account? There are no bats in my paper.) The four Twitter accounts combined had fewer followers than me. Looking at the Altmetric page did remind me, however, that I need to make the title of my paper more Twitter friendly. It was way longer than 140 characters.

Four days later (29 February 2016), I got a Google Scholar alert in my inbox alerting me to the presence of my pre-print. Again, this was an automated response. That was another way people could have found my paper.

Three weeks have gone by now. And that’s all the action I’ve seen on the pre-print. Even with a New York Times article bringing attention to pre-prints and bioRxiv, nobody noticed mine. Instead, the attention is focused on the “established labs,” as Arturo Casadevall calls them. The cool kids.

I learned that for rank and file biologists, posting pre-prints is probably just another task to do whose tangible rewards compared to a journal article are “few to none.” Like Kim Kardashian posting a selfie, pre-prints will probably only get attention if a person who is already famous does it.

Update, 18 March 2016: This post has been getting quite a bit of interest (thank you!), and I think as a result, the Altmetric score on the article referenced herein has jumped from 1 to 11 (though mostly due to being included in this blog post).

Related posts

The science of asking

Mission creep in scientific publishing

Reference

Faulkes Z. 2016. The long-term sand crab study: phenology, geographic size variation, and a rare new colour morph in Lepidopa benedicti (Decapoda: Albuneidae). bioRxiv. http://dx.doi.org/10.1101/041376

External links

The selfish scientist’s guide to preprint posting

Handful of Biologists Went Rogue and Published Directly to Internet

Taking the online medicine (The Economist article)

Picture from here.

15 March 2016

Tuesday Crustie: Paddy

I love this design, although since the only native Irish crayfish is the white-clawed crayfish, I kind of wish this guy had some patches of white on his claws.

This design is by Jen, who has this page with a whole whacking big stack of crustacean designs! I suppose my only complaint is that the “lobster” and “crawfish” look mighty similar to one another.

08 March 2016

Fewer shots, more diversity?

The National Science Foundation just announced changes to its Graduate Research Fellowship Program (GRFP) that limit the number of applications for grad students to one. The NSF lists several reasons for this:

1) result in a higher success rate for GRFP applicants

You know how else you could increase the success rate? Give more awards. But the political reality is that the NSF budget is stagnant, so the best they can do is increase the success rate at the cost of limiting chances to apply.

2) increase the diversity of the total pool of individuals and of institutions from which applications may come through an increase in the number of individuals applying before they are admitted into graduate programs,

You know what else could increase the diversity of applicants? Instead of changing the applicants, change the reviewers. Change the criteria those new reviewers are judging the awards by. Let’s not forget that in the past, GRFPs have been criticized for giving awards to doctoral students in a limited number of institutions. If the review process is biased, tweaks to the applicant pool won’t create more diversity, because they’ll be going through the same filter.

And just because I run a master’s program, let me call out that in the past, only 3.5% of awards went to students in master’s programs (page 3, footnote 3 in this report). Why do you hate master’s students, NSF?!

Terry McGlynn notes, however:

Shifting the emphasis of @NSF GRFPs to undergrads will increase representation because it will recruit people. If you give a GRFP to someone already in grad school, odds are they would be successful anyway. GRFPs can get undergrads into good labs.

This is a good point, but if undergraduates are still competing against graduates, and there’s still a bias towards the advanced doctoral students as the “safer bet,” the change in the applicant pool might not achieve the desired result in diversity. This might be an argument for splitting the program: one for undergraduates transitioning to graduate programs, one for graduate students in programs.

Finally, NSF’s last reason for the change.

3) ease the workload burden for applicants, reference writers, and reviewers.

I am glad to see this listed as a reason, because it is honest. It’s a lot of hard work to review applications, and you have to keep it to a reasonable level. I think it might be even more honest if it was listed as reason #1 instead of reason #3.

External links

NSF Graduate Fellowships are a part of the problem

How the NSF Graduate Research Fellowship is slowly turning into a dissertation grant

Evaluation of the National Science Foundation’s Graduate Research Fellowship Program final report

NSF makes its graduate fellowships more accessible

Picture from here.

07 March 2016

A light touch or hands on? Monday morning quarterbacking a retraction

Things moved fast last week. I blogged about a paper with strange references to “The Creator”. Within hours, the story exploded on social media (the main hashtag was #HandOfGod on Twitter), and even garnered some tech news coverage before the paper was retracted. I was teaching and traveling when a lot of this went down, so didn’t have much chance to follow up until now.

PLOS ONE deserves credit for not dawdling on this. A concern was identified, and by the next day, they arrived at a decision.

PLOS ONE loses the points they just earned, however, for the lack of transparency in their retraction notice.

Our internal review and the advice we have received have confirmed the concerns about the article and revealed that the peer review process did not adequately evaluate several aspects of the work.

Which means... what, exactly? PLOS ONE claims it will publish any technically sound science. What’s the basis for retraction, then? What about the paper is not technically sound? You can’t find it in the retraction notice. I haven’t seen any criticisms about the paper’s scientific content beyond, “It’s not news; we know hands grab things.” But no comments like, “The wrong statistic was used,” or “The authors didn’t account for this uncontrolled variable.”

The retraction notice says the editorial process wasn’t very good, but that should not be the basis for a retraction. If an editor screwed up, a competent paper shouldn’t suffer. A good result shouldn’t be punished for a bad process.

PLOS staffer David Knutsen wrote more for this blog post, but we still only get vague references to:

(T)he quality of the paper in general, the rationale of the study and its presentation relative to existing literature(.)

It still doesn’t answer what technical flaw the paper had. PLOS ONE comes out looking like it might have swatted a fly with a sledgehammer, and facing accusations of racism.

This situation is not a bug in the PLOS ONE system: it’s a feature. Ph.D. Comics published this cartoon about PLOS ONE back in 2009, which takes on new relevance:

“Let the world decide what is important.” PLOS ONE was designed from the get go to:

- Have a light editorial touch. “Is it technically competent? Then publish.” The high volume of papers (PLOS ONE is the biggest single scientific journal in the world) pretty much ensures you cannot have the same level of editing that happens at other journals.

- Encourage post-publication peer review.

When you combine those two things, things like the “hand of god” paper are practically inevitable. The question becomes whether the benefits of light editing (authors having more direct and potentially faster communication of what they want) outweigh the potential downsides (disproportionate criticism for obvious things that could have been fixed).

Related posts

A handy new Intelligent Design paper

External links

Follow-up notification from PLoS staff

A Science Journal Invokes ‘the Creator,’ and Science Pushes Back

Hand of God paper retracted: PLOS ONE “could not stand by the pre-publication assessment”

The “Creator” paper, Post-pub Peer Review, and Racism Among Scientists

This Paper Should Not Have Been Retracted: #HandofGod highlights the worst aspects of science twitter

02 March 2016

A handy new Intelligent Design paper

Back on January 5, PLOS ONE published an Intelligent Design paper containing numerous references to “the Creator.” Judging from the consistent use of capitals, this would mean the Judaeo-Christian God.

I have no idea how this went unnoticed by science social media. The paper didn’t bury this, but announced it right in the abstract:

The explicit functional link indicates that the biomechanical characteristic of tendinous connective architecture between muscles and articulations is the proper design by the Creator to perform a multitude of daily tasks in a comfortable way.

The Introduction marks it as an Intelligent Design paper:

Hand coordination should indicate the mystery of the Creator’s invention.

And again in the discussion:

In conclusion, our study can improve the understanding of the human hand and confirm that the mechanical architecture is the proper design by the Creator for dexterous performance of numerous functions following the evolutionary remodeling of the ancestral hand for millions of years.

Judging from the rest of the text, the paper probably has other credible information, which is probably why PLOS ONE published it. PLOS ONE reviews for technical competence, not importance.

From my point of view, these three sentences should have been removed as a condition of publication. Random references to a “Creator” do not advance the argument of the paper. It is not at all clear what predictions flow from the “Creator” hypothesis. The authors do not support the “Creator” hypothesis with relevant literature from the biomechanical field. In short, references to a “Creator” mark the paper as not competent science, and thus unfit for publication under PLOS ONE’s standards.

This might be one of the few times that a peer-reviewed journal has published an Intelligent Design paper. The best known was a paper by Stephen Meyer in the Proceedings of the Biological Society of Washington, over ten years ago. It was much more explicit about its Intelligent Design aspirations than this one, and it got in by... suspect methods of dodging peer review.

PLOS ONE made a mistake. It’s not a big one, as far as mistakes go. Reasonable people can disagree over how big a mistake it is. It’s probably not as bad as running an entire journal devoted to homeopathy (say). It’s not surprising that the biggest journal in the world, handling thousands of papers annually, makes mistakes. They’re far from the only journal to have published texts with religious overtones.

It’s an embarrassing mistake because it would have been so easy to fix. Change three sentences, and this paper would be uncontested. Good editing is valuable, not disposable.

Hat tip to James McInerney and Jeffrey Beall.

Update: Oh yeah, this story is now burning up my Twitter feed. PLOS ONE tweeted that they are looking into this paper.

Update: Grant pointed out that even one of the paper’s own authors doesn’t seem to buy into their references to “the Creator”:

Update: Terry McGlynn notes that the lab page of one of the authors doesn’t appear to have any overt religious overtones. Several people are suggesting that this is a mistranslation. I’m not convinced (nor are others). Even if it is “just” a mistranslation, the journal doesn’t come out looking much better, because it highlights weak editing.

The hashtag for this has been deemed #HandOfGod.

Update, 1:12 pm: PLOS ONE writes:

The PLOS ONE editors apologize that this language was not addressed internally or by the Academic Editor during the evaluation of the manuscript.

Reference

Liu M-J, Xiong C-H, Xiong L, Huang X-L. 2016. Biomechanical characteristics of hand coordination in grasping activities of daily living. PLOS ONE 11(1): e0146193. http://dx.doi.org/10.1371/journal.pone.0146193

External links

The shroud of retraction: Virology Journal withdraws paper about whether Christ cured a woman with flu

Hands are the “proper design by the Creator,” PLOS ONE paper suggests

01 March 2016

Tuesday Crustie: Fuzzy vizzie

Jessica Waller took this gorgeous picture of a juvenile American lobster (Homarus americanus). It’s the People’s Choice award winner in this year’s Vizzies for excellence in scientific visualization!

Good photos do not come easily. The page notes:

This image of a live three-week-old specimen was one of thousands Waller took.

When this went around on Facebook, friend of the blog Al Dove mentioned he got an honorable mention in a different competition for a picture of these beasts, but even younger:

External links

2016 Vizzies