When UTRGV was forming, I blogged a lot about the choice of a name for a new mascot. What we got was... something that not a lot of people were happy with at first. In the years since, I guess people have made peace with it, because I haven’t heard much about the name.

Well, we’ve waited four, almost five years for a mascot to go with the name, and today we got it.

As far as I know, he doesn’t have a name. Just “Vaquero.”

The website lists what each feature represents, although I think a lot of this stuff is so far beyond subtle that nobody would ever guess what it is supposed to mean.

Scarf: The scarf features the half-rider logo against an orange background. Traditionally, the scarves were worn to protect against the sun, wind and dirt. Today, the scarf is worn to represent working Vaqueros.

Vest: The vest features the UTRGV Athletics symbol of the “V” on the buttons, which match the symbol on the back of the vest representing school spirit and pride.

Gloves: The gray and orange gloves symbolize strength and power. They represent Vaqueros building the future of the region and Texas.

Shirt: The white shirt represents the beginning of UTRGV, which was built through hard work and determination. (Couldn’t it just represent, I don’t know, cleanliness? - ZF)

Boots: A modern style of the classic cowboy boot, these feature elements that are unique to the region and UTRGV. The blue stitching along the boot represents the flowing Rio Grande River which signifies the ever-changing growth in the region and connects the U.S. to Mexico.

The boot handles showcase three stars. The blue star represents legacy institution UT Brownsville, the green star represents legacy institution UT-Pan American and the orange star represents the union of both to create UTRGV.

I don’t know. I was never a fan of the “Vaquero” name and this does not win me over. I just feel like the guy could do with a shave. I will be interested to see if this re-ignites the debate about the name...

Update: Apparently the mascot’s slogan is “V’s up!” Which doesn’t make any sense! And demonstrates questionable apostrophe usage!

External links

Welcome your Vaquero

12 June 2019

11 June 2019

Tuesday Crustie: For your crustacean GIF needs

For a while, I’ve been meaning to make GIFs of a sand crab digging that are suitable for sharing on social media.

Here’s a serious one.

And here’s a fun one.

10 June 2019

Journal shopping in reverse: unethical, impolite, or expected?

A recent article describes a practice unknown to me. Some authors submit papers for review, then withdraw them if the reviews are positive and try again at another “higher impact” or “better” journal.

It is entirely normal for authors to go “journal shopping” when reviews are bad: submit the article, and if the reviewers don’t like it, resubmit it to another journal. But this is the first time I’d heard of this process going the other way. It would never even occur to me to do this.

Nancy Gough tweeted her agreement with this article, and said that this behaviour was unethical. And she got an earful. Frankly, online reaction to this article seemed to be summed up as, “I know you are, but what am I?”

A lot of the reaction that I saw (though I didn’t see all of it) seemed to be, “Journals exploit us, so we should exploit journals!” or “Journals should pay us for our time.” This seemed to be directed at for-profit publishers, but people seemed to be lumping journals from for-profit publishers together with non-profit journals from scientific societies.

The “people in glass houses should not throw stones” crowd has a point, but I’m not sure it addresses the actual issue. Publishers didn’t create the norms of refereeing and peer review. That was us, guys. Scientists. We created the idea that there are research communities. We created the idea that reviewing papers is a service to that community.

I don’t know that I would call “withdraw after positive reviews and resubmit to a journal perceived as better” unethical, but I think it’s a dick move.

Like asking someone to a dance and then never dancing with them. Sure, there are no rules against it, but it’s not too much to expect a little reciprocity. The “Me first, me only” attitude drags.

Since the whole behaviour is “glam humping” and impact factor chasing, this seems a good time to link out to a couple of articles that point out the many ways that impact factor is deeply flawed: here and here.

I’ve written before about grumpiness about peer review being due in part to an eroded sense of research community. I guess people don’t want to see journals as part of the research community, but they are.

Related posts

A sense of community

External links

08 June 2019

Shoot the hostage, preprint edition

It takes a certain kind of academic to refuse to review papers. Not because of a lack of expertise, a lack of time, or a conflict of interest, but because they don’t like how other authors have decided to disseminate their results.

I’ve been declining reviews for manuscripts that aren’t posted as preprints for the last couple of months (I get about 1-2 requests to review per week). I’ve been emailing the authors for every paper I decline to suggest posting.

This isn’t a new tactic, and I’ve made my thoughts on it known. But this takes review refusal to a new level. This individual isn’t just informing the editor he won’t review, but chases down the authors to tell them how to do their job.

I’m sure the emails are meant as helpful, and may be well crafted and polite. Still. Does advocating for preprints have to be done right then?

I see reviewing as service, as something you do to help make your research community function, and to build trust and reciprocity. I don’t see reviewing as an opportunity to chastise your colleagues for their publication decisions. But I guess some people are unconcerned whether they are seen as “generous” in their community or... something else.

And I am still struggling to work out if there are any conditions where I think it would genuinely be worth it to refuse to review.

Additional, 9 June 2019: I ran a poll on Twitter. 18% described this as “Collegial peer pressure.” The other 82% described it as “Asinine interference.”

Related posts

Shoot the hostage

07 June 2019

Graylists for academic publishing

Lots of academics are upset by bad journals, which are often labelled “predatory.” This is maybe not a great name for them, because it implies people publishing in them are unwilling victims, and we know that a lot are not.

Lots of scientists want guidance about which journals are credible and which are not. And for the last few years, there’s been a lot of interest in lists of journals. Blacklists spell out all the bad journals, whitelists give all the good ones.

The desire for lists might seem strange if you’re looking at the problem from the point of view of an author. You know what journals you read, what journals your colleagues publish in, and so on. But part of the desire for lists comes when you have to evaluate journals as part of looking at someone else’s work, like when you’re on a tenure and promotion committee.

But a new paper shows it ain’t that simple.

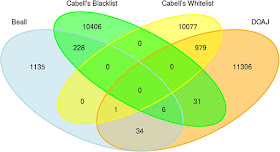

Strinzel and colleagues compared two blacklists and two whitelists, and found that some journals appeared on both blacklists and whitelists.

There are some obvious problems with this analysis. “Beall” is Jeffrey Beall’s blacklist, which he no longer maintains, so it is out of date. Beall’s list was also the opinion of just one person. (It’s indicative of the demand for simple lists that one put out by a single person, with little transparency, could gain so much credibility.)

One blacklist and one whitelist are from the same commercial source (Cabell), so they are not independent samples. It would be surprising if the same source listed a journal on both its whitelist and its blacklist!

The paper includes a Venn diagram for publishers, too, which shows similar results (though there is a publisher on both of Cabell’s lists).

This is kind of what I expected. And really, this should be yesterday’s news. Let’s remember the journal Homeopathy is put out by an established, recognized academic publisher (Elsevier), indexed in Web of Science, and indexed in PubMed. It’s a bad journal on a nonexistent topic that was somehow “whitelisted” by multiple services that claimed to be vetting what they index.

Academic publishing is a complex field. We should not expect all journals to fall cleanly into two easily recognizable categories of “Good guys” and “Bad guys” – no matter how much we would like it to be that easy.

It’s always surprising to me that academics, who will nuance themselves into oblivion on their own research, so badly want “If / then” binary solutions to publishing and career advancement.

If you’re going to have blacklists and whitelists, you should have graylists, too. There are going to be journals that have some problematic practices but that are put out by people with no ill intent (unlike “predatory” journals which deliberately misrepresent themselves).

Reference

Strinzel M, Severin A, Milzow K, Egger M. 2019. Blacklists and whitelists to tackle predatory publishing: A cross-sectional comparison and thematic analysis. mBio 10(3): e00411-19. https://doi.org/10.1128/mBio.00411-19.

Related posts

06 June 2019

How do you know if the science is good? Wait 50 years

A common question among non-scientists is how to tell what science you can trust. I think the best answer is, unfortunately, the least practical one.

Wait.

There’s one peculiarity that distinguishes parascience from science. In orthodox science, it’s very rare for a controversy to last more than a generation; 50 years at the outside. Yet this is exactly what’s happened with the paranormal, which is the best possible proof that most of it is rubbish. It never takes that long to establish the facts – when there are some facts.

— Arthur C. Clarke, 10 July 1985, “Strange Powers: The Verdict,” Arthur C. Clarke’s World of Strange Powers

Emphasis added. I must have heard Clarke say that on television decades ago, and it stuck with me all this time. I remembered it a little differently. I thought it was, “Scientists generally get to the bottom of things in about 50 years, if there’s any bottom to be gotten to.” I finally got around to digging up the exact quote today.

Related quote:

One of the things that should always be asked about scientific evidence is, how old is it? It’s like wine. If the science about climate change were only a few years old, I’d be a skeptic, too.

— Naomi Oreskes, quoted by Justin Gillis, “Naomi Oreskes, a Lightning Rod in a Changing Climate” 15 June 2015, The New York Times